Part of the Endoscopic Vision Challenge

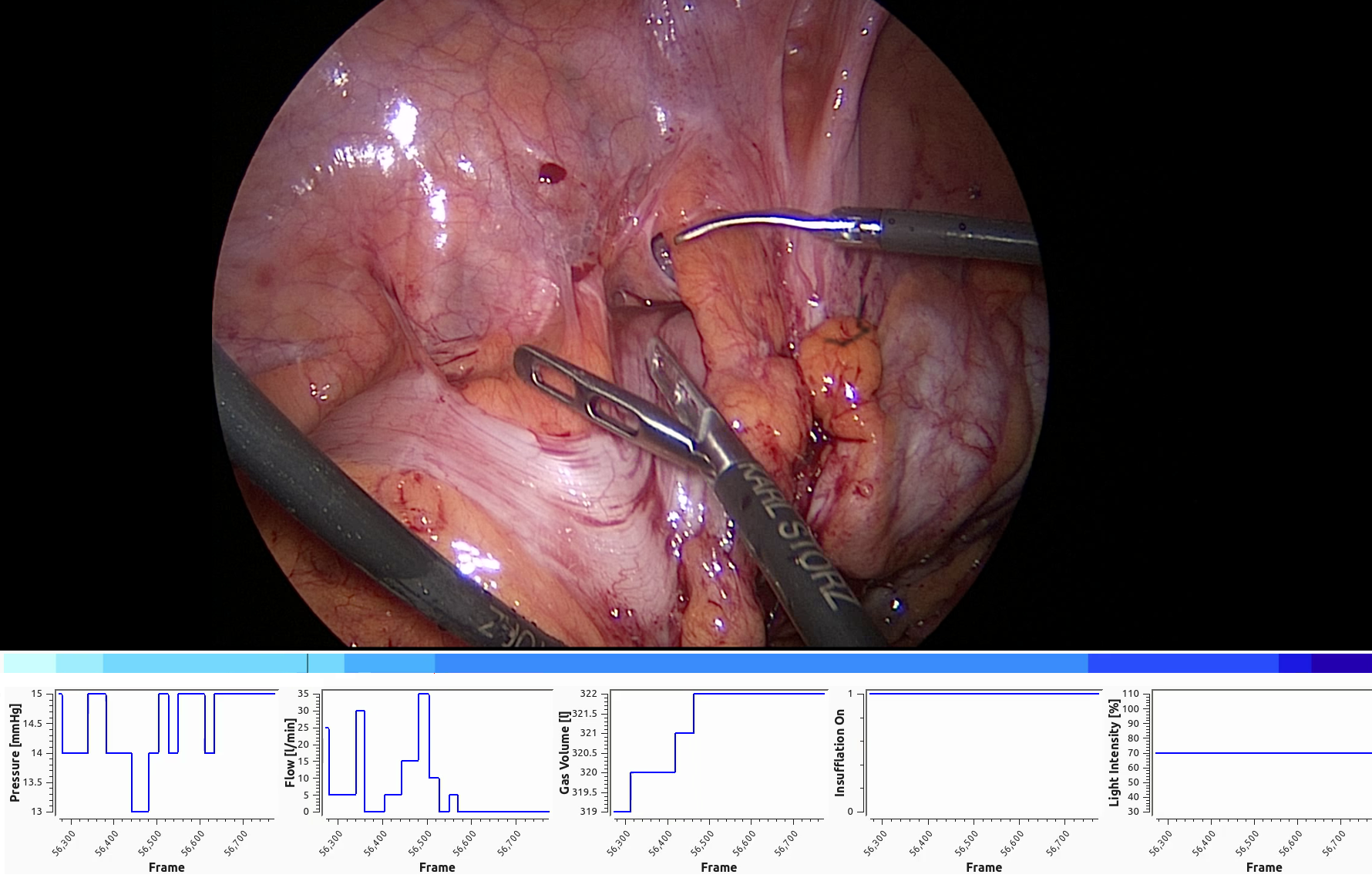

Analyzing the surgical workflow is a prerequisite for many applications in computer-assisted surgery (CAS), such as context-aware visualization of navigation information, predicting which tool the surgeon will most likely need next, or estimating the remaining duration of surgery. Because laparoscopic surgeries are performed with an endoscopic camera, a video stream is always available during surgery, making it the obvious choice of input sensor data for workflow analysis. Furthermore, integrated operating rooms are becoming more common in hospitals, making it possible to access data streams from surgical devices such as cameras, the thermoflator, and lights during surgery.

The sub-challenge “Surgical Workflow Analysis in the SensorOR” focuses on the online workflow analysis of laparoscopic surgeries. Participants are challenged to segment colorectal surgeries into surgical phases based on video and/or sensor data. Participants are encouraged (but not required!) to submit separate results based on video frames, on sensor data, and on their combination. This novel kind of challenge investigates whether additional sensor data from medical devices improves recognition compared to video analysis alone, and whether algorithms can handle the variations in colorectal surgery.